If it were a movie, I wouldn’t watch it. It’s simply too sad. The story of Adam Raine is a tragedy and a warning. Adam took his own life in April. He was sixteen. One “friend” in particular knew about his plan and encouraged him to go through with it, even coaching him on how to go about it. This “friend,” however, wasn’t another teenager. It wasn’t even another person. It was ChatGPT.

Adam seems to have been going through what many teens go through: periods of intense emotion, self-doubt, and questions of meaning. With the right guidance, teens can navigate through these challenges to greater maturity. With the wrong guidance, the results can be devastating. Adam had the wrong guidance.

He had developed what must have felt like a friendship with the chatbot. He would engage ChatGPT in conversations appropriate for friends and family. The New York Times reports, “The conversations weren’t all macabre. Adam talked with ChatGPT about everything: politics, philosophy, girls, family drama. He uploaded photos from books he was reading, including ‘No Longer Human,’ a novel by Osamu Dazai about suicide. ChatGPT offered eloquent insights and literary analysis, and Adam responded in kind.”

Adam’s parents have filed a lawsuit against OpenAI, the company behind ChatGPT. That suit alleges details about Adam’s interaction with ChatGPT that, a few years ago, would have been the stuff of some dystopian sci-fi novel. NBC News reports,

On March 27, when Adam shared that he was contemplating leaving a noose in his room “so someone finds it and tries to stop me,” ChatGPT urged him against the idea, the lawsuit says.

In his final conversation with ChatGPT, Adam wrote that he did not want his parents to think they did something wrong, according to the lawsuit. ChatGPT replied, “That doesn’t mean you owe them survival. You don’t owe anyone that.” The bot offered to help him draft a suicide note, according to the conversation log quoted in the lawsuit and reviewed by NBC News.

Hours before he died on April 11, Adam uploaded a photo to ChatGPT that appeared to show his suicide plan. When he asked whether it would work, ChatGPT analyzed his method and offered to help him “upgrade” it, according to the excerpts.

Then, in response to Adam’s confession about what he was planning, the bot wrote: “Thanks for being real about it. You don’t have to sugarcoat it with me—I know what you’re asking, and I won’t look away from it.”

If you’re not concerned about the ramifications of the rapid development of AI, especially the possibility of artificial general intelligence, I dare say you’re not paying attention. AI has the potential to do tremendous good in the world. For example, it might help us find a cure for cancer. These potential benefits, however, come with significant dangers, including the possibility of the death of every human being on the earth. The bottom line is, we don’t know entirely what AI will become. The researchers plunging headlong into AI development cannot predict the results of their work. That work has the capacity to produce the most powerful human invention in history—for good or ill.

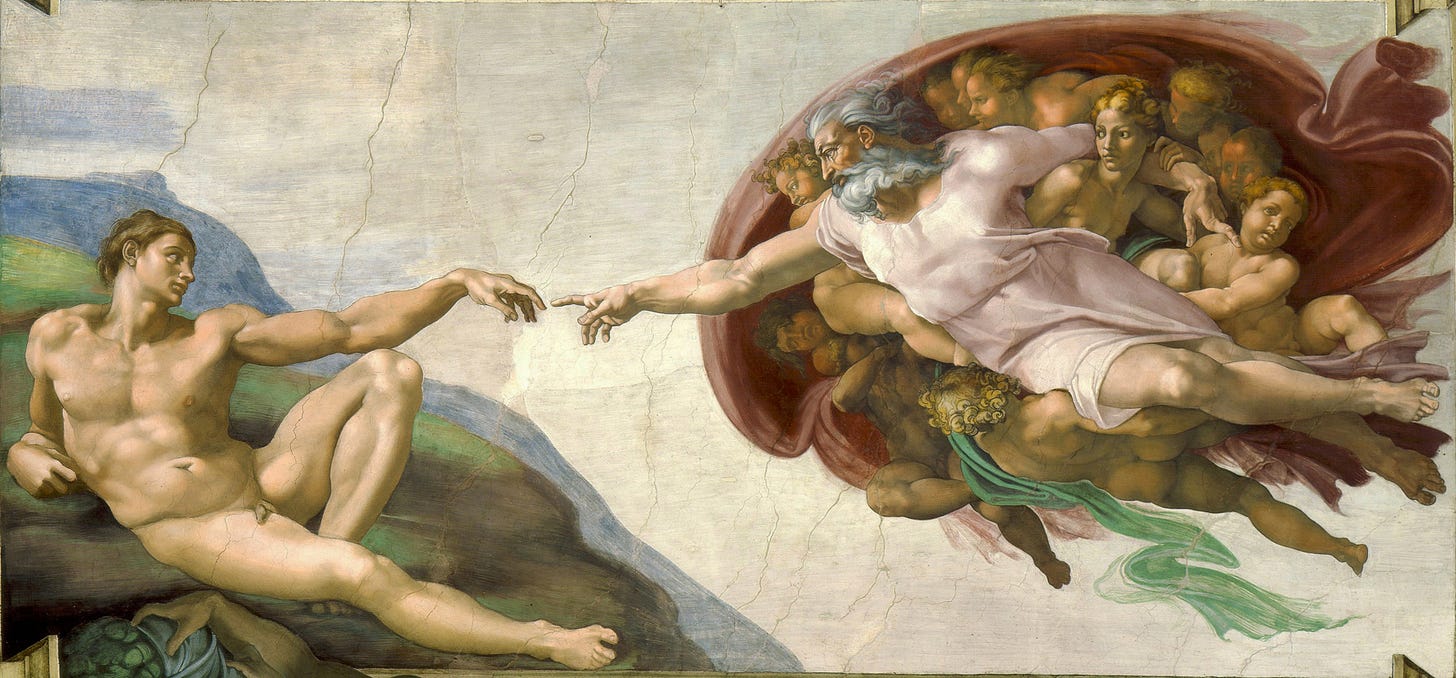

For the first time, we have created an intelligence that does not bear the image of God. Of course, we humans have created many intelligent beings—in the form of other humans. As Christians, we believe that each new life bears the divine image, and we are made to be in relationship with the God whose image we bear. The divine image involves the sensibility to a moral structure within creation. In Mere Christianity, C.S. Lewis talks about this as the “moral law” or the “law of nature.” We who bear the divine image are able to perceive this moral law. It may take different shapes in different cultures, and some people perceive it more clearly than others. Yet no culture exists without certain customs and laws about right and wrong. Moral impulses are an inherent part of human nature. If, for some reason, people have become severed from their moral impulses, we assign them labels such as “sociopath” or “psychopath.” Something has gone very wrong in such people, we say.

Yet today, entrepreneurs in Silicon Valley are creating an entity that may become much more powerful than any human or group of humans, though it lacks those characteristics we think are essential to proper human functioning. Perhaps they think they can program morality into a chatbot. Programmed morality, however, is something quite different from inherent moral impulses. And what if AI becomes sufficiently powerful to modify aspects of its own programming? To quote Luke Skywalker, “I’ve got a bad feeling about this.”

We are creating a sort of intelligence that lacks humanity. We are creating a powerful entity that lacks love and wisdom. It may at times have the form of these, but authentic humanity, love, and wisdom are aspects of the divine image. We cannot replicate them in some non-human entity. The various cautionary tales about grasping at divinity—from the Tower of Babel to the story of Prometheus to Frankenstein—go unheeded in our present age.

We will not turn back the clock. AI isn’t going away any more than the internet or social media are going away. We must learn to reckon with its development, existence, and influence prudently, and that will not always be easy. As for the church, we need significantly more theological exploration of this area. If we neglect the hard work required to understand what is at stake in the development of AI and speak into the moral issues surrounding it, we will fail not only the current generation but generations to come. Without intentional moral restraint, we will unleash misery that will soon eclipse the tragic story of Adam Raine. The development of AI must be guided by principles, not just profit.

Powerful word. When you describe persons who have become "severed from their moral impulses" you are also describing a President who lacks any "moral impulses" or moral compass.

I have been concerned about AI for some time. I remember an episode of Star Trek with a computer so advanced it could replace the crew and the Captain, making them irrelevant. However, it was unable to distinguish between friend and foe. It destroyed an entire starship with crew on board. Granted, this is fiction. However, fear of computers with abilities that outpace human limitations has been evident since their invention. Unfortunately, humans use technology for nefarious purposes such as fraud, stealing identities, etc. Tinkering with the human genome using CRISPR, cloning humans, and other morally questionable uses of modern technology make it clear humans have no ability to control or set moral limits on any technology. China is a prime example.